A while back, I stumbled on this video: https://www.youtube.com/watch?v=ahsO5bhBUtk. In it, he goes through various facts and figures for the PlayStation and Xbox controllers. The one that piqued my interest the most is polling rate (or rather, "true update rate"). Just looking at current gen, we have the DualSense and whatever Microsoft is calling the new Xbox controller. Over a wired connection, the DualSense defaults to 250 Hz but can be overclocked to 1000 Hz. Xbox controllers, meanwhile, are stuck at 124 Hz. This got me wondering how much this matters and how much is enough.

-Last gen, we were lucky to get games that even tried to hit 60 FPS, so even at a low rate of 124 Hz, that's still two updates per frame (or four at 30 FPS) on the Xbox side. I can see why they would think this was enough then, but if that's still what they are using (as the video suggests), why? A major selling point of the new consoles is 120 FPS support; is one update per frame enough?

-On the flip side, the DualSense updates far more often, but when checking it via a gamepad tester on PC, the sticks seem to have a lot of jitter. This leads me to wonder if the higher polling/update rate is actually a benefit or if it would be better off with a lower rate.

-Microsoft has made a big deal about their Dynamic Latency Input, or DLI, for the new Series consoles and controllers. According to Microsoft, the controller captures button presses "as fast as 2ms." But the video shows it's still updating at 124 Hz. So does that mean it is polling internally at 500 Hz but only reporting updates at 124 Hz? Is the additional benefit only available on console (the PC drivers haven't changed for the new controllers, so that's possible)? Does it even matter? And if, as they claim, there's no negative hit to battery life, does polling rate really hurt battery life in the first place, as many suggest?
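The per-frame arithmetic in these questions is easy to check. A minimal sketch, assuming the controller reports at a perfectly steady rate (real devices may jitter): `updates_per_frame` gives the average number of reports per rendered frame, and because report times don't line up with frame boundaries, any single frame sees either the floor or the ceiling of that average. It also converts DLI's quoted 2 ms capture time into the equivalent rate. The function names are made up for this illustration.

```python
import math


def updates_per_frame(report_hz: float, fps: float) -> float:
    """Average number of controller reports that arrive during one rendered frame."""
    return report_hz / fps


def reports_in_any_frame(report_hz: float, fps: float) -> tuple[int, int]:
    """Fewest and most reports a single frame can see, assuming a perfectly steady report clock."""
    avg = updates_per_frame(report_hz, fps)
    return math.floor(avg), math.ceil(avg)


def rate_from_interval_ms(interval_ms: float) -> float:
    """Convert a sampling interval in milliseconds into a rate in Hz."""
    return 1000.0 / interval_ms


for fps in (30, 60, 120):
    lo, hi = reports_in_any_frame(124, fps)
    print(f"124 Hz at {fps} FPS: {updates_per_frame(124, fps):.2f} per frame on average ({lo} to {hi})")

print(rate_from_interval_ms(2.0))  # DLI's "as fast as 2ms" corresponds to 500.0 Hz
```

At 120 FPS most frames get a single report and an occasional frame gets two, so "one update per frame" is about right on average.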

Obviously, I have none of the answers, that's why I'm posting here. But I'm very interested to hear what people with more knowledge have to say about it. I'm mostly curious if there are good reasons for Microsoft to be doing what they are doing.

Controller polling rates - how much do they matter?

thebigcheese

- Posts: 707

- Joined: Sun Aug 21, 2016 5:18 pm

NewSchoolBoxer

- Posts: 369

- Joined: Fri Jun 21, 2019 2:53 pm

- Location: Atlanta, GA

Re: Controller polling rates - how much do they matter?

I think about this too. Basically, controller polling rates matter "a little". There are other factors to consider. A good reaction time is 300 ms. Simplifying the analysis, polling at 250 Hz adds an average of (1/2) * (1/250) s = 2 ms, and 1000 Hz adds (1/2) * (1/1000) s = 0.5 ms. The one-half factor is an average over a uniformly distributed button press: you could get lucky and press right before the poll, or unlucky and press right after.

The 1.5 ms reduction from 1000 Hz polling is 9% of a frame at 60 fps. Assuming the game has a once-per-frame logic update window, it's nice to have that extra leniency for optimal timing. But really, that's nothing compared to chugging a few hundred mg of caffeine to drop your reaction time to 280 ms.
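The arithmetic in the last two paragraphs fits in a few lines. A sketch assuming, as above, that the press lands at a uniformly random point within a polling period, so the average wait is half a period:

```python
def avg_polling_delay_ms(poll_hz: float) -> float:
    """Average extra wait before a press is seen: half of one polling period, in ms."""
    return 0.5 * 1000.0 / poll_hz


delay_250 = avg_polling_delay_ms(250)    # 2.0 ms
delay_1000 = avg_polling_delay_ms(1000)  # 0.5 ms
saving_ms = delay_250 - delay_1000       # 1.5 ms
frame_ms_60 = 1000.0 / 60                # about 16.67 ms per frame at 60 fps

print(f"saving: {saving_ms} ms = {saving_ms / frame_ms_60:.0%} of a 60 fps frame")
```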

You have to realize the marketing hustle of getting people to buy stuff they don't need. Are you a pro first person shooter player or getting sponsored for speedrunning?

--------

Getting controller input over USB, WiFi or Bluetooth through the PC's hardware and into the game's software also adds time. It should be said that a 60 Hz CRT takes half a frame (8.3 ms) to draw down to the middle of the screen and the full 16.67 ms to reach the bottom. Modern LCDs and OLEDs update the whole screen many, many times faster... but they have slower pixel response times.
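The CRT figures come from the beam sweeping top to bottom once per refresh. A simplified sketch that ignores the vertical blanking interval, so a real beam reaches the bottom of the visible picture a little sooner than this says:

```python
def crt_scanout_delay_ms(fraction_down: float, refresh_hz: float = 60.0) -> float:
    """Time after a refresh starts until the beam reaches a point `fraction_down` of the
    way down the screen (0 = top, 1 = bottom). Simplification: vertical blanking is ignored."""
    if not 0.0 <= fraction_down <= 1.0:
        raise ValueError("fraction_down must be between 0 and 1")
    return fraction_down * 1000.0 / refresh_hz


print(f"middle: {crt_scanout_delay_ms(0.5):.1f} ms, bottom: {crt_scanout_delay_ms(1.0):.2f} ms")
```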

That all ignores the time the game takes to process your input. Super Mario World famously has 2 frames of input delay from you hitting B to the sprite starting to jump. A typical Switch game lags 5-7 frames, while PC and more powerful consoles are more like 2-4. Run-ahead in software emulators can reduce overall input lag below that of native hardware + CRT. Not getting into a cheating discussion.
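To compare those frame counts with the polling numbers, convert them to milliseconds. A sketch assuming a flat 60 fps (NTSC refresh rates differ slightly from exactly 60 Hz, and many games don't hold a steady frame rate):

```python
def frames_to_ms(frames: float, fps: float = 60.0) -> float:
    """Convert a delay measured in frames into milliseconds at the given frame rate."""
    return frames * 1000.0 / fps


examples = {
    "Super Mario World (2 frames)": (2, 2),
    "typical Switch game (5-7 frames)": (5, 7),
    "PC / stronger consoles (2-4 frames)": (2, 4),
}
for label, (low, high) in examples.items():
    print(f"{label}: {frames_to_ms(low):.1f}-{frames_to_ms(high):.1f} ms")
```

Even the low end, about 33 ms, dwarfs the 1.5 ms difference between 250 Hz and 1000 Hz polling.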

--------

Dude can record in 640 fps slow motion, talk about not messing around. I thought I was high end for having 240 fps on my iPhone.

I completely expected you to link the Shmups Junkie input lag video on Switch. He has a "Fact or Fiction" video showing that Switch -> 100 ft HDMI cable + matrix + 50 ft CAT5 cable + matrix + 25 ft HDMI cable adds no input lag compared to using just the 25 ft cable, lol. Data over a cable moves at about 2/3 the speed of light. That's roughly 656 feet in a microsecond (1/1000 of a millisecond). Note that sending over WiFi or Bluetooth could be a bad idea if many other nearby devices are sharing the same wireless band. Packets could be dropped.
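The cable claim is easy to sanity-check. A sketch assuming a velocity factor of 2/3 (the real figure depends on the cable) and counting only signal travel time, not whatever processing the matrix switches do:

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0
METERS_PER_FOOT = 0.3048


def feet_per_microsecond(velocity_factor: float = 2 / 3) -> float:
    """Distance a signal covers in 1 microsecond (1/1000 ms) at the given fraction of c."""
    meters = SPEED_OF_LIGHT_M_PER_S * velocity_factor * 1e-6
    return meters / METERS_PER_FOOT


def propagation_delay_us(length_ft: float, velocity_factor: float = 2 / 3) -> float:
    """Signal travel time through a cable of the given length, in microseconds."""
    return length_ft / feet_per_microsecond(velocity_factor)


total_ft = 100 + 50 + 25  # the three cable runs in the chain
print(f"{feet_per_microsecond():.0f} ft per microsecond")
print(f"{total_ft} ft of cable: {propagation_delay_us(total_ft):.2f} microseconds")
```

A quarter of a microsecond is tens of thousands of times shorter than a 16.67 ms frame.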

thebigcheese wrote:
> -Last gen, we were lucky to get games that would even try to hit 60 FPS, so even at a low rate of 124 Hz, that's still two updates per frame (or four at 30 FPS) on the Xbox side. I can see why they would think this was enough then, but if that's still what they are using (as the video suggests), why? A major selling point of the consoles is 120 FPS support, is one update per frame enough?
>
> -On the flip side, the DualSense updates far more often, but, when checking it via a gamepad tester on PC, the sticks seem to have a lot of jitter. These leads me to wonder if the higher polling/update rate is actually a benefit or if it would be better off with a lower rate.

Faster polling is always better, but the gain has diminishing returns and may not be humanly perceptible. I mostly answered this, but one more thing: just because the video game system polls X times per second doesn't mean the record of button presses is used X times per second. Let's look at the SNES. The hardware polls once per frame, so once every 16.67 ms on NTSC. The SNES CPU has to execute LDA $4218 to load player 1's controller input into the accumulator. That only happens when the program counter reaches that part of the game's code; if the game is lagging, it doesn't execute once per frame. The game could check player input many times per frame if that's what the developers wanted. But the screen can only be updated once per frame, during the vertical blanking window, so games probably don't check more often than that, even though it would shrink the average (1/2) * (1/60) s ≈ 8.3 ms window. You've got bigger problems to worry about when programming on an SNES.
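The diminishing returns show up directly in the same half-a-period average used earlier in this post. A sketch: from 125 Hz upward, each doubling of the polling rate saves only half as much as the previous doubling did. The 60 Hz entry stands in for once-per-frame polling like the SNES's.

```python
def avg_polling_delay_ms(poll_hz: float) -> float:
    """Average wait for the next poll when presses land at random times: half a period."""
    return 0.5 * 1000.0 / poll_hz


rates_hz = [60, 125, 250, 500, 1000, 2000, 4000, 8000]
for slower, faster in zip(rates_hz, rates_hz[1:]):
    saved = avg_polling_delay_ms(slower) - avg_polling_delay_ms(faster)
    print(f"{slower:>5} Hz -> {faster:>5} Hz: average delay "
          f"{avg_polling_delay_ms(slower):.3f} -> {avg_polling_delay_ms(faster):.3f} ms "
          f"(saves {saved:.3f} ms)")
```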

Re: Controller polling rates - how much do they matter?

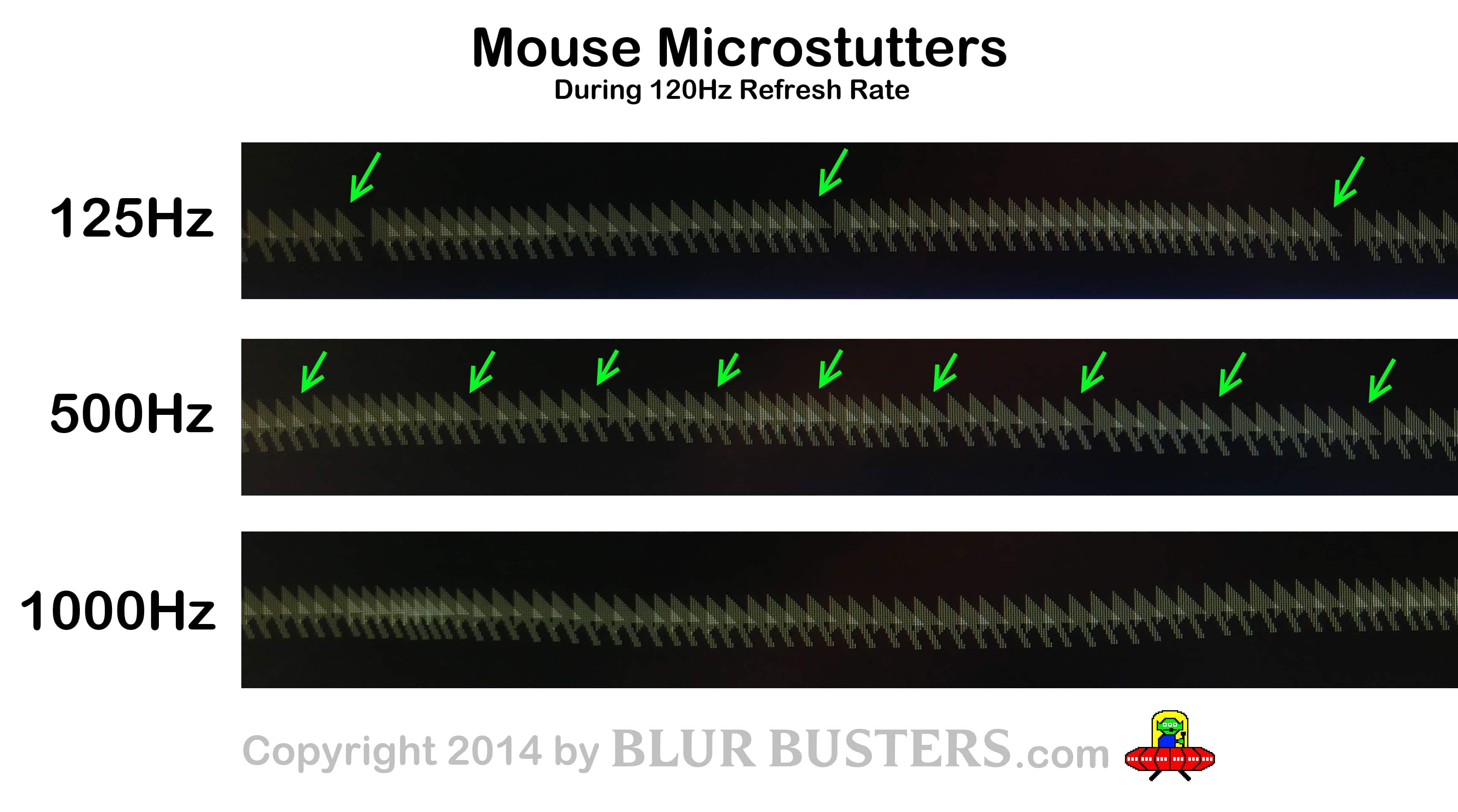

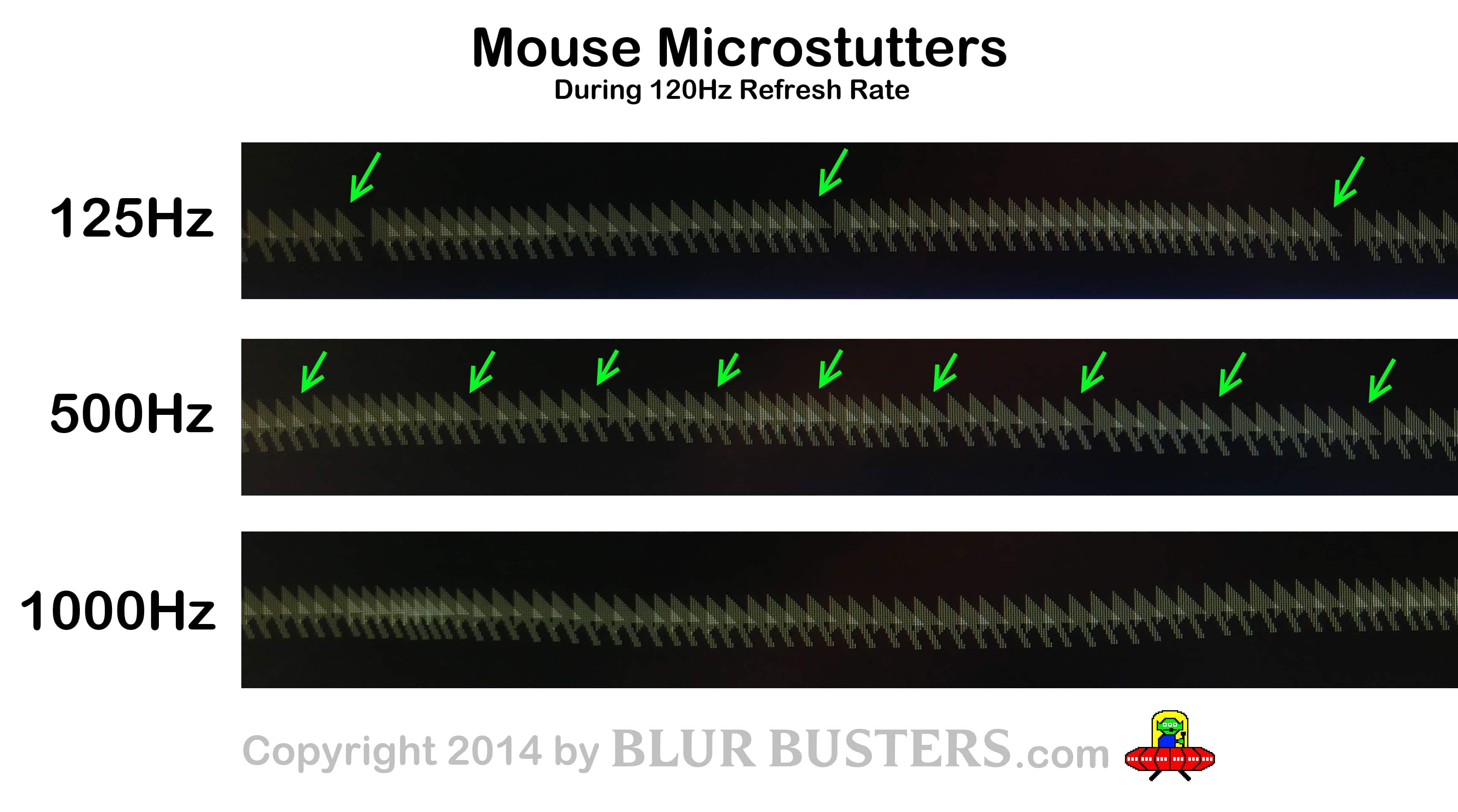

Latency isn't the only factor, there's also consistency to consider. This is for mice, but I'd suspect something similar applies here:

https://blurbusters.com/faq/mouse-guide/

This image shows that if your polling rate isn't perfectly aligned with your refresh rate (and it probably never would be), you can see benefits in consistency even up to 1000Hz:

In terms of polling rates and latency, consider that if you have (say) a 100Hz polling rate, that's adding up to 10ms of latency on top of all the other sources of latency in the pipeline.
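The consistency point can be illustrated by counting how many polls land inside each display refresh. A sketch assuming ideal, drift-free clocks that start together (real clocks wander, which only shifts where the uneven frames fall): at 125 Hz polling on a 60 Hz display, frames alternate between 2 and 3 fresh samples, a 50% swing, while at 1000 Hz they get 16 or 17, about a 6% swing. The worst-case wait is one full polling period, which is where the 10 ms figure for 100 Hz comes from.

```python
import math
from fractions import Fraction


def polls_per_refresh(poll_hz: int, refresh_hz: int, frames: int = 60) -> list[int]:
    """Count the polls that fall in each refresh interval [k/refresh, (k+1)/refresh).

    Poll j happens at time j/poll_hz; exact fractions avoid floating-point edge errors."""
    ratio = Fraction(poll_hz, refresh_hz)  # polls per refresh, e.g. 125/60
    # Polls before time k/refresh: ceil(k * ratio); the difference counts polls inside frame k.
    return [math.ceil((k + 1) * ratio) - math.ceil(k * ratio) for k in range(frames)]


def worst_case_poll_wait_ms(poll_hz: float) -> float:
    """Longest possible wait for the next poll: one full period."""
    return 1000.0 / poll_hz


for rate in (125, 1000):
    counts = polls_per_refresh(rate, 60)
    print(f"{rate} Hz on a 60 Hz display: {min(counts)} to {max(counts)} polls per frame")
print(worst_case_poll_wait_ms(100))  # 10.0 ms for a 100 Hz polling rate
```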

This video from Microsoft Applied Research also does a really good job at visualizing latency using a touchscreen. They show 100ms, 10ms, and 1ms. Note that even at 10ms there is visibly obvious rubber banding of the box around the finger. Jump to around 50 seconds in:

https://www.youtube.com/watch?v=vOvQCPLkPt4

Personally, I run my mouse (G502 Lightspeed) at 500 Hz, because anything below that feels less smooth, and 1000Hz feels strange to me (somehow less responsive, can't explain it).

thebigcheese

Re: Controller polling rates - how much do they matter?

I'm less interested in the latency part; it's more about accuracy and the potential for missed inputs. In that regard, is 124 Hz enough? If not, does DLI adequately compensate for it? Is 800 Hz actually better in any of these regards? The video suggests that having more data points means less interpolation and therefore increased accuracy, but I have to wonder how much of that is theory and how much is reality.
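On missed inputs, one way to frame it: if a controller only reports the button state it sees at each poll (an assumption; whether real controllers latch short presses between reports, and what DLI does internally, isn't established here), a tap shorter than one poll period can fall entirely between two polls. With the tap starting at a random point, the chance of that is 1 - tap/period. The sketch computes this in closed form and double-checks it by sweeping start times; both helpers are hypothetical, not any real driver API.

```python
def miss_probability(tap_ms: float, poll_hz: float) -> float:
    """Chance a press of length tap_ms is never sampled, assuming state-only polling (no latching)."""
    period_ms = 1000.0 / poll_hz
    if tap_ms >= period_ms:
        return 0.0  # the press always spans at least one poll
    return 1.0 - tap_ms / period_ms


def miss_probability_sweep(tap_ms: float, poll_hz: float, steps: int = 100_000) -> float:
    """Brute-force check: sweep the tap's start time evenly across one poll period."""
    period_ms = 1000.0 / poll_hz
    misses = 0
    for i in range(steps):
        start = (i + 0.5) / steps * period_ms  # polls happen at 0, period_ms, 2 * period_ms, ...
        if start + tap_ms < period_ms:         # released before the next poll: never seen
            misses += 1
    return misses / steps


for rate in (124, 250, 800, 1000):
    print(f"5 ms tap at {rate} Hz: {miss_probability(5.0, rate):.0%} chance of being missed")
```

Under this model, misses only happen for taps shorter than one poll period (about 8 ms at 124 Hz).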

NewSchoolBoxer

Re: Controller polling rates - how much do they matter?

thebigcheese wrote:
> The latency part I am less interested in, it's more in terms of accuracy and potential for missed inputs. In that regard, is 124 Hz enough? Does DLI adequately compensate for that if not? Is 800 Hz actually better in any of these regards? The video suggests that having more data points means less interpolation and therefore increased accuracy, but I have to wonder how much of that is theory and how much is reality.

Let's assume the (relatively) simple case where the rate at which the game reads the mouse's position and pressed keys from RAM equals the rate at which the GPU sends video to the monitor, and both are in phase. Say you have a nice 165 Hz monitor like me; then you only need to know the game's logic update rate and the mouse polling rate.

Let's look at 60 Hz and 165 Hz monitors and 125 Hz and 1000 Hz mice. Incidentally, I found a mouse tracker program that says all of mine are 125 Hz. We're dealing with linear and periodic functions, so when the delay is spread evenly between its min and max, its average value is (min + max) / 2. As in, if the min delay is 10 ms and the max is 90 ms, then the average delay is (10 + 90)/2 = 50 ms.

Monitor refresh rate is f

Game's rate it polls the RAM for mouse position is g

Mouse's polling rate is p (m would be confused with milliseconds ms)

Let's start with the relationship of p to g and come back to f at the end. Ignoring f, the min delay is 0, from the very lucky chance of p and g polling at the exact same time. What we need then is the max delay.

When the game reads RAM, the newest mouse report it finds was taken sometime within the last period of p. The worst case is the game reading right before the mouse polls again, so that report is almost one full period of p old. Unless p and g are locked together, the gap between their polls shifts a little every cycle and eventually sweeps through every value between 0 and one period of p, whether p is bigger or smaller than g. That gives one formula for both cases:

max delay = period of p = 1/p

What is the average case? It's half that: (1/2) * (1/p).

We are considering p of 125 Hz and 1000 Hz. Periods are 1/125 = 8 ms and 1/1000 = 1 ms. While we can't measure g directly without hacking, it's probably as low as 30 Hz for an old 480i game and as high as 165 Hz. I've seen video of a supercomputer rendering 7000 fps that the monitor capped to 120 or 165 Hz. That 7000 would be the g here. Even in that extreme case, the average delay is still half the period of p: reading RAM more often doesn't make the mouse report any fresher.

For a g of 100 Hz and p of 125 Hz, the game reads every 10 ms and the mouse polls every 8 ms, so each read finds a report 2 ms older than the last one did, wrapping back around after 8 ms. The report's age cycles through roughly 0, 2, 4 and 6 ms (plus a fixed offset under 2 ms), covering the whole 8 ms period evenly. So the average delay is (1/2) * (1/p) = 0.5 * 8 ms = 4.0 ms.

If p speeds up to 1000 Hz, the average delay is (1/2) * 1 ms = 0.5 ms.

So for a game logic updating rate of 100 Hz, mouse polling adds an average delay of 4.0 ms on the low end (125 Hz) and 0.5 ms on the high end (1000 Hz).

Now what about the monitor? We said it's in sync with g, so add one monitor cycle to draw the screen: 1/f = 16.67 ms for 60 Hz and 6.06 ms for 165 Hz.

So the total delay you would experience ranges from about 6.56 ms (6.06 + 0.5) with a fast monitor and mouse to about 20.67 ms (16.67 + 4.0) with a slow monitor and mouse.

What about matching p and g at 100 Hz, or making p an exact multiple of g? Then the gap between their polls never shifts, so the delay is constant, but it's stuck at whatever the starting offset happens to be: anywhere from 0 to almost a full period of p. With a random starting offset, the average is still (1/2) * (1/p), which is 5.0 ms with both at 100 Hz, compared to 4.0 ms with 125 Hz mouse polling.

You're better off maximizing p than matching it to g.

Yes, upgrading your mouse from 125 Hz to 1000 Hz would help, a little (about 3.5 ms on average). A 165 Hz monitor with a graphics card that can sync with G-Sync (Nvidia) or FreeSync (AMD) is the more important upgrade, though (about 10.6 ms).
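The delay model can also be brute-forced instead of derived. A sketch, assuming the mouse and the game run on free-running clocks with an unknown phase offset and that the game simply uses the newest mouse report in RAM (`mean_sample_age_ms` and its parameters are illustrative, not from any real tool): it sweeps the offset evenly across one mouse period and averages how old the report is at each game read. It then adds one monitor refresh, following the sync assumption from the start of this post.

```python
def mean_sample_age_ms(p_hz: float, g_hz: float, n_reads: int = 1000, n_phases: int = 200) -> float:
    """Average age (ms) of the newest mouse report at the moments the game reads it.

    Mouse polls happen at phase + j/p; the game reads at k/g. The offset `phase` is swept
    evenly over one mouse period so no lucky or unlucky alignment dominates."""
    period_s = 1.0 / p_hz
    total_s = 0.0
    for i in range(n_phases):
        phase = (i + 0.5) / n_phases * period_s
        for k in range(n_reads):
            read_time = k / g_hz
            total_s += (read_time - phase) % period_s  # time since the latest mouse poll
    return 1000.0 * total_s / (n_phases * n_reads)


def total_delay_ms(p_hz: float, f_hz: float) -> float:
    """Average mouse-sample age (half a mouse period) plus one monitor refresh."""
    return 500.0 / p_hz + 1000.0 / f_hz


for p, g in [(125, 100), (1000, 100), (125, 165), (100, 100)]:
    print(f"p = {p:>4} Hz, g = {g} Hz: average sample age {mean_sample_age_ms(p, g):.2f} ms")

for f in (60, 165):
    for p in (125, 1000):
        print(f"{f} Hz monitor, {p} Hz mouse: about {total_delay_ms(p, f):.2f} ms")
```

In this model g drops out: the average age stays near half of p's period, and the locked 100 Hz / 100 Hz case only fixes the delay at one value per starting offset rather than lowering its average.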