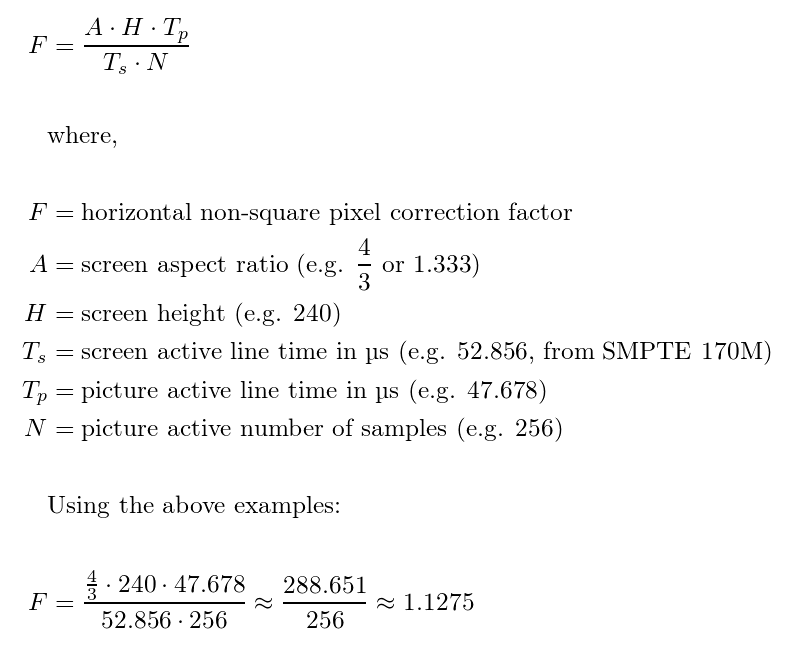

Fudoh wrote:this has been my method since I started to wonder about it.

The core point here is that the actual horizontal resolution doesn't really play into it. Active scanline timing for Genesis 256px and 320px modes is almost identical.

The often-referred-to method explained on the Nesdev wiki* is much more complicated and I honestly can't follow it through, because I don't understand how the pixel aspect ratio suddenly plays into it, when you can completely ignore it when following the above method.

* https://wiki.nesdev.com/w/index.php/Overscan

I believe you were quite close to the answer. I'll touch on a few aspects you're probably familiar with, but to give everyone the whole picture (and on the chance that I'm corrected or complemented and learn something as a result), allow me to go back a bit.

In analog video, there is no horizontal resolution(*), because there are no pixels, which is why active/total line extent is stated in terms of a time duration (in digital video, you have additional means of expressing that). Because there are no pixels, there is, consequently, no pixel aspect ratio. The only thing video signal norms for conventional analog television systems expected in that regard was that the signal be projected onto a rectangular visible area that had a 4:3 aspect ratio resulting from the physical length of two adjacent sides. Of course, the visible area was usually not what you'd call rectangular, but this is what was idealized. In case a given analog signal was deemed as not properly fitting onto said area, people were expected to "turn the knobs" on their TV, if available, until the image neatly filled out the visible area (colloquially, the "screen"). There was no standard that defined the exact structure of a TV screen (which varied across different CRT TV products), or how exactly the video signal should be projected onto it -- in other words, which exact portion of the video signal the 4:3 spec applied to.

The methodology presented here is only applicable when the video signal takes a wholly digital path from generation to display (e.g. emulation, or an FPGA in combination with a contemporary LCD), and the goal is to recreate an aspect ratio the originally analog signal would plausibly have had on a (conventional) analog TV screen. When an analog signal is displayed on an analog screen instead, and you think the proportions are off, you "turn the knobs". When an analog signal is sampled for digital display instead, the display aspect ratio additionally depends on the way it's sampled, and things get more complicated. If OP's comparison shots originated from emulation, with a square-pixeled rendering being the baseline, the methodology is a fine approximation of how it would be displayed on a CRT. It'd be a mistake, however, to take OP's comparison shots as a paradigm showing exactly how proportions would look on each and every CRT; there is no such thing, and you'd be chasing a phantom.

The Nesdev wiki article, then, talks about pixel aspect ratio because it adopts a digital video perspective, where the NES' analog signal output is sampled. Being in the digital video realm, we now have pixels as well as a horizontal resolution, and pixel aspect ratio comes into play because the display aspect ratio (the aspect ratio of the image as displayed) is defined as storage aspect ratio × pixel aspect ratio. Pixel aspect ratio itself depends on the interplay between the pixel rate (if the analog source signal is generated by a digital circuit, as in this case, as opposed to, say, an analog video camera) and the parameters that are used for sampling the signal for digital use. If you want to home in on an aspect ratio that could plausibly manifest if a CRT were fed the same underlying analog signal, you have to choose those parameters carefully. For example, if you simply sample a 525-line (total) NTSC signal according to the Rec. 601 standard, you get non-square pixels with an aspect ratio of 10:11.
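To make the "display aspect ratio = storage aspect ratio × pixel aspect ratio" relation concrete, here's a minimal sketch in Python. Only the 10:11 figure comes from the paragraph above; the function name and the 704×480 and 720×480 frame sizes are my own picks for illustration (they're the active widths commonly used with Rec. 601 sampling of 525-line video), so treat it as an example rather than anything the wiki article prescribes.

```python
from fractions import Fraction

def display_aspect_ratio(width, height, par):
    """DAR = SAR x PAR, where SAR is width / height of the stored pixel grid."""
    storage_aspect_ratio = Fraction(width, height)
    return storage_aspect_ratio * par

# Pixel aspect ratio for Rec. 601 sampling of a 525-line signal (from the text).
REC601_NTSC_PAR = Fraction(10, 11)

# Frame sizes below are illustrative assumptions, not taken from the post.
dar_704 = display_aspect_ratio(704, 480, REC601_NTSC_PAR)
dar_720 = display_aspect_ratio(720, 480, REC601_NTSC_PAR)

print(dar_704)  # 4/3
print(dar_720)  # 15/11
```

Note how the result depends on which portion of the sampled line you count as the picture: 704 samples come out at exactly 4:3, while all 720 come out slightly wider. That's the digital-side version of "which exact portion of the video signal the 4:3 spec applies to".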

I'm not sure right now whether this perspective inevitably applies to emulation. I guess it could, depending on how you as a developer emulate the PPU and render video output, but it doesn't have to. I'm quite sure it shouldn't matter for the user. When using emulation or an FPGA in combination with a contemporary digital display, I'd always refer to OP's method. Deriving an aspect ratio correction factor by taking a sampling viewpoint in this case is not "wrong", just like OP's method is not "right", but it only muddles things. The article addresses a different purpose.

Just a side note: the article mentions CRTs, so one should be mindful not to mix up analog and digital video concepts in one's understanding here, but the specific reasoning behind its mentions of CRTs in some contexts seems coherent to me.

(*) CRT screens do have an effective maximum subjective, optical horizontal resolution, which is expressed in TV lines, but that has to do with the respective CRT's build and the transmission medium, not the analog video signal itself. TV lines are not to be confused with scanlines. The counterpart for the vertical dimension involves the Kell factor: effective vertical resolution = number of active lines per frame × Kell factor.