OSSC (DIY video digitizer & scandoubler)

-

Powerman293

- Posts: 5

- Joined: Mon May 06, 2019 6:33 am

Re: OSSC (DIY video digitizer & scandoubler)

Working on capturing some FF7 for a video. How strong should the scanlines be for video capture?

Re: OSSC (DIY video digitizer & scandoubler)

Sorry if this has been asked before all:

I am trying to figure out a way to scale my Irem M72 (R-Type) properly.

The issue is the system's native 384x256 resolution. I end up losing the bottom part of the screen unless I modify SAMPLING OPTIONS > ADV. TIMING 240P, setting V SYNCLEN to 6 and V BACKPORCH to 30.

With this I am able to scroll the image up but then I lose the top of the screen.

On my CRT this game needs to be crushed to fit with the vertical size adjustment. I am wondering if there is a similar setting I can use on the OSSC to crush this game into a 4:3 window.

-

XtraSmiley

- Posts: 697

- Joined: Fri Apr 20, 2018 9:22 am

- Location: Washington DC

Re: OSSC (DIY video digitizer & scandoubler)

EVN wrote:
Sorry if this has been asked before all:

I am trying to figure out a way to scale my Irem M72 (R-Type) properly.

The issue is the system's native 384x256 resolution. I end up losing the bottom part of the screen or if I modify: SAMPLING OPTIONS>ADV. TIMING 240P>V SYNCLEN 6>V BACKPORCH 30

With this I am able to scroll the image up but then I lose the top of the screen.

On my CRT this game needs to be crushed to fit with the vertical size adjustment. I am wondering if there is a similar setting I can use on the OSSC to crush this game into a 4:3 window.

It's been awhile since I messed with M72, but here is what I wrote about it then:

HAS=TTL, OSSC=Pre/Post Coast 1. 3x mode looks best as it doesn't cut off the top or bottom, but it is 720p; 5x mode is 1080p, however the top and bottom are cut off. Also, the bottom layer of this game's screen (score, lives, time layer) is cut off at the default settings. To see it you have to adjust the settings under Advanced Timing Tweaker. I set Vertical Sync Length to 6, Vertical Backporch Length to 30, and Vertical Active Length to 240. This allows you to see the full bottom line information and graphics, however the top is now cut off a bit. You could cut back on my settings to see the numbers, giving up a little bit of the graphics but gaining back the top line. Your call how you rock this.

EDIT: Oh crap, I see you are using my suggested settings already! LOL, sorry. Yeah, I guess crushing it would be best, but I don't think I came back around to finding a solution, so I guess I'll hang around and see if someone posts!

-

thchardcore

- Posts: 498

- Joined: Wed Jun 22, 2005 9:20 am

- Location: Liberal cesspool

Re: OSSC (DIY video digitizer & scandoubler)

Hopefully this isn't taken the wrong way, but I am very happy with the OSSC for SFC, MD, PS1+2, N64 and Saturn. For arcade boards, I use the Retro Scaler A1 as it handles everything except some late Toaplan V2 boards.

The OSSC is the ultimate console line doubler/upscaler but it isn't centered around arcade hardware.

A camel is a horse designed by a committee

Re: OSSC (DIY video digitizer & scandoubler)

The reason here is likely based on the desire to sort output resolutions into TV-"compatible" sets of resolutions.

The 240p 2x option is basically meant to produce a compatible 480p output signal and the 3x is meant to produce something at least resembling a valid 720p signal.

Doubling the input signal of an M72 board is tough, as it would produce a resolution of 512p while decreasing the refresh rate to 55 or 56Hz (or whatever the M72 boards run at).

My best guess is that there's no technical reason that the OSSC shouldn't be able to handle this, but it is more than likely to cause a lot of problems on the output side. If used with a capable secondary scaler or with a straight HDMI to RGBHV converter, this should be possible though. (EDIT: refer to the reply below mine for the technical how-to)

Last edited by Fudoh on Mon May 25, 2020 8:35 am, edited 1 time in total.

Re: OSSC (DIY video digitizer & scandoubler)

EVN wrote:
On my CRT this game needs to be crushed to fit with the vertical size adjustment. I am wondering if there is a similar setting I can use on the OSSC to crush this game into a 4:3 window.

Increase V.Active to 256. Whether your display can handle the format is another question. But you actually end up with a rather standard frame for the signal if using generic x4 mode (1280x1024); how far from standard it is also depends on total lines (we already know the framerate is quite a way off). It'd basically be following the tips for x3 & x4 modes here: https://videogameperfection.com/forums/ ... lx5-modes/

Bottom line is, if the system outputs 256 active video lines, and you want to have all of them in your picture, then you need to adjust so they fit within the V.Active.
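As a rough check of the numbers mentioned in this exchange, the arithmetic can be sketched in a few lines of Python. This is only an illustration: `output_height` is a made-up helper name, and it only multiplies the sampled active lines by the line-multiplication factor. It ignores total line count, refresh rate and any cropping a given mode may apply.

```python
def output_height(active_lines: int, multiplier: int) -> int:
    """Active output lines when every sampled input line is repeated `multiplier` times.

    Hypothetical helper for illustration; not part of any OSSC tooling.
    """
    if active_lines <= 0 or multiplier <= 0:
        raise ValueError("active_lines and multiplier must be positive")
    return active_lines * multiplier


if __name__ == "__main__":
    # 240 is the usual V.Active; 256 is what the M72 reportedly needs.
    for lines in (240, 256):
        for mult in (2, 3, 4):
            print(f"{lines} active lines x{mult} -> {output_height(lines, mult)} output lines")
```

With 256 active lines, x2 gives the 512 lines mentioned above and x4 gives 1024, which matches the height of a 1280x1024 frame.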

Re: OSSC (DIY video digitizer & scandoubler)

Fudoh wrote:
Isn't "OSSC compatibility" dependent on the signal source?

not really. It comes down to the capture unit's ability to lock to non standard timings, since the OSSC "tricks" to achieve any resolution higher than 480p and isn't able to output timings close to the VESA or SMPTE standard timings.

On the one hand, due to the wide variety of analog signals you can throw at the OSSC, and because the output digital video timings are always closely coupled to the input analog video timings, the output timings vary accordingly across different signal sources, even regardless of the effective OSSC settings. On the other hand, while I don't have an ironclad overview, I doubt that it's a black-and-white situation where there are those capture devices that accept everything an OSSC will (for all practical intents and purposes) throw at them, and those devices that very strictly only accept standard timings. Rather, my everyday informal experience with different source/sink combinations and online reports by others suggest that there are more than just two degrees of tolerance across different capture devices (just like with displays).

Even without going into technical details, observe that the lion's share of video game systems the OSSC is typically fed with output non-standard timings, while some specific cases of signal sources are known for causing problems on the sink side more often than others (while the OSSC itself successfully locks onto the signal); examples include the PS1 outputting 314 total lines for a share of games in 50Hz mode, and the SNES' jitter issue. In both cases, there are sinks that accept the resulting TMDS signal without further issues, and there are sinks for which this is not the case.

In light of this, wouldn't it be an oversimplification to speak of "OSSC (in)compatibility" while only factoring in either the source- or the sink side of the chain?

Or was I merely misunderstanding and you actually just meant something like "OSSC friendliness" or "good" compatibility?

Re: OSSC (DIY video digitizer & scandoubler)

Harrumph wrote:
EVN wrote:
On my CRT this game needs to be crushed to fit with the vertical size adjustment. I am wondering if there is a similar setting I can use on the OSSC to crush this game into a 4:3 window.

Increase V.Active to 256. Whether your display can handle the format is another question. But you actually end up with a rather standard frame for the signal if using generic x4 mode (1280x1024); how far from standard it is also depends on total lines (we already know the framerate is quite a way off). It'd basically be following the tips for x3 & x4 modes here: https://videogameperfection.com/forums/ ... lx5-modes/

Bottom line is, if the system outputs 256 active video lines, and you want to have all of them in your picture, then you need to adjust so they fit within the V.Active.

Thanks man, I messed with that setting and this works:

V synclen = 6

V backporch = 14

V active = 260
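To see why these values recover both the top and the bottom, it can help to compare which source lines each set of values would sample. The sketch below rests on an assumption that is not confirmed anywhere in this thread: that sampling starts V synclen + V backporch lines after the start of vertical sync and continues for V active lines. `capture_window` is a hypothetical helper, and the line numbers are zero-based.

```python
def capture_window(v_synclen: int, v_backporch: int, v_active: int) -> tuple[int, int]:
    """Return (first_line, last_line) of the sampled region, counted from vsync start.

    Assumption (not verified against the firmware): the active window begins
    right after the sync pulse and back porch and spans `v_active` lines.
    """
    first = v_synclen + v_backporch
    last = first + v_active - 1
    return first, last


if __name__ == "__main__":
    earlier = capture_window(6, 30, 240)   # values from the earlier suggestion
    working = capture_window(6, 14, 260)   # values reported to work above
    print("earlier settings sample lines", earlier)
    print("working settings sample lines", working)
    print("extra lines gained at the top:", earlier[0] - working[0])
    print("extra lines gained at the bottom:", working[1] - earlier[1])
```

Under that assumption, the working values cover everything the earlier window did, plus extra lines above and below it.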

-

Dyingspade

- Posts: 1

- Joined: Fri May 29, 2020 6:48 pm

Re: OSSC (DIY video digitizer & scandoubler)

Hi,

Looking for some help please.

I am having an issue with my OSSC with PS1 games played on a PS2, but only in 320 optim.

All other PS1 modes work fine with optimal timings, as do all my other consoles.

I am using optimal timings and the image is perfectly sharp, but I get very faint, horizontal wavy lines on the image.

I can’t get rid of it by adjusting the phase, and it happens on 2 different ps2s.

Anyone have any ideas please?

-

bobrocks95

- Posts: 3624

- Joined: Mon Apr 30, 2012 2:27 am

- Location: Kentucky

Re: OSSC (DIY video digitizer & scandoubler)

Just realized this question made more sense in the OSSC thread- I'm not getting a picture on my LG OLED when using GBI High-Fidelity with a 360p output. I'm using the gbihf-ossc.dol file included by default, and tried the OSSC's 360p mode at passthrough (which didn't seem to sync at all), 2x, and 3x.

Since the OLED is compatible with everything else I've thrown at it through the OSSC, I figure I have something configured wrong with GBI.

EDIT: I'm using GCVideo-DVI 3.0d into a Portta DAC for component output.

PS1 Disc-Based Game ID BIOS patch for MemCard Pro and SD2PSX automatic VMC switching.

Re: OSSC (DIY video digitizer & scandoubler)

bobrocks95 wrote:
I'm using the gbihf-ossc.dol file included by default, and tried the OSSC's 360p mode at passthrough (which didn't seem to sync at all), 2x, and 3x.

It's a bit unclear to me, the OSSC has sync in 2x/3x, or it never has sync? What does the OSSC display show?

-

bobrocks95

- Posts: 3624

- Joined: Mon Apr 30, 2012 2:27 am

- Location: Kentucky

Re: OSSC (DIY video digitizer & scandoubler)

Harrumph wrote:
bobrocks95 wrote:
I'm using the gbihf-ossc.dol file included by default, and tried the OSSC's 360p mode at passthrough (which didn't seem to sync at all), 2x, and 3x.

It's a bit unclear to me, the OSSC has sync in 2x/3x, or it never has sync? What does the OSSC display show?

It has sync in 2x, 2x 240x360, and 3x 240x360 modes, and none in passthru. Says 375p 22.5kHz 60Hz.

Maybe some advanced settings I could tweak to see if a picture shows up?

EDIT: Another startup has the OSSC flickering between 374p 60.16Hz and No Sync rapidly. This has happened 3 times in a row now trying to tweak various GC-Video settings.

-

nmalinoski

- Posts: 1974

- Joined: Wed Jul 19, 2017 1:52 pm

Re: OSSC (DIY video digitizer & scandoubler)

bobrocks95 wrote:
Harrumph wrote:
bobrocks95 wrote:
I'm using the gbihf-ossc.dol file included by default, and tried the OSSC's 360p mode at passthrough (which didn't seem to sync at all), 2x, and 3x.

It's a bit unclear to me, the OSSC has sync in 2x/3x, or it never has sync? What does the OSSC display show?

It has sync in 2x, 2x 240x360, and 3x 240x360 modes, and none in passthru. Says 375p 22.5kHz 60Hz.

Maybe some advanced settings I could tweak to see if a picture shows up?

EDIT: Another startup has the OSSC flickering between 374p 60.16Hz and No Sync rapidly. This has happened 3 times in a row now trying to tweak various GC-Video settings.

To be clear, it's the display that isn't accepting the signal, not that the OSSC suddenly loses sync when put into passthrough, correct?

-

bobrocks95

- Posts: 3624

- Joined: Mon Apr 30, 2012 2:27 am

- Location: Kentucky

Re: OSSC (DIY video digitizer & scandoubler)

nmalinoski wrote:
bobrocks95 wrote:
Harrumph wrote:
It's a bit unclear to me, the OSSC has sync in 2x/3x, or it never has sync? What does the OSSC display show?

It has sync in 2x, 2x 240x360, and 3x 240x360 modes, and none in passthru. Says 375p 22.5kHz 60Hz.

Maybe some advanced settings I could tweak to see if a picture shows up?

EDIT: Another startup has the OSSC flickering between 374p 60.16Hz and No Sync rapidly. This has happened 3 times in a row now trying to tweak various GC-Video settings.

To be clear, it's the display that isn't accepting the signal, not that the OSSC suddenly loses sync when put into passthrough, correct?

I would have said that before, but the last few times I tried it the OSSC was losing sync as well.

Re: OSSC (DIY video digitizer & scandoubler)

bobrocks95 wrote:
Maybe some advanced settings I could tweak to see if a picture shows up?

EDIT: Another startup has the OSSC flickering between 374p 60.16Hz and No Sync rapidly. This has happened 3 times in a row now trying to tweak various GC-Video settings.

When the signal is multiplied, it is really a standard (or at least really close to standard) 720p/1080p signal. You don't have to tweak anything on the OSSC btw (only if you use the HDcustom format, not HD60). So I suspect it's rather something with your Portta DAC, or the cabling in between possibly.

Re: OSSC (DIY video digitizer & scandoubler)

I am trying to get my analogue MiSTer FPGA SNES, NES and GBA cores to display via my OSSC.

I have no issues with Sega, Neo Geo or PCE cores but the Nintendo ones will not display. It appears to sync as the light is green and stays so but the screen is blank. They act like my original SNES and NES did before I did the dejitter mod, but I am told that the dejitter function has been implemented into these cores so they should display. Has anyone managed this?

Re: OSSC (DIY video digitizer & scandoubler)

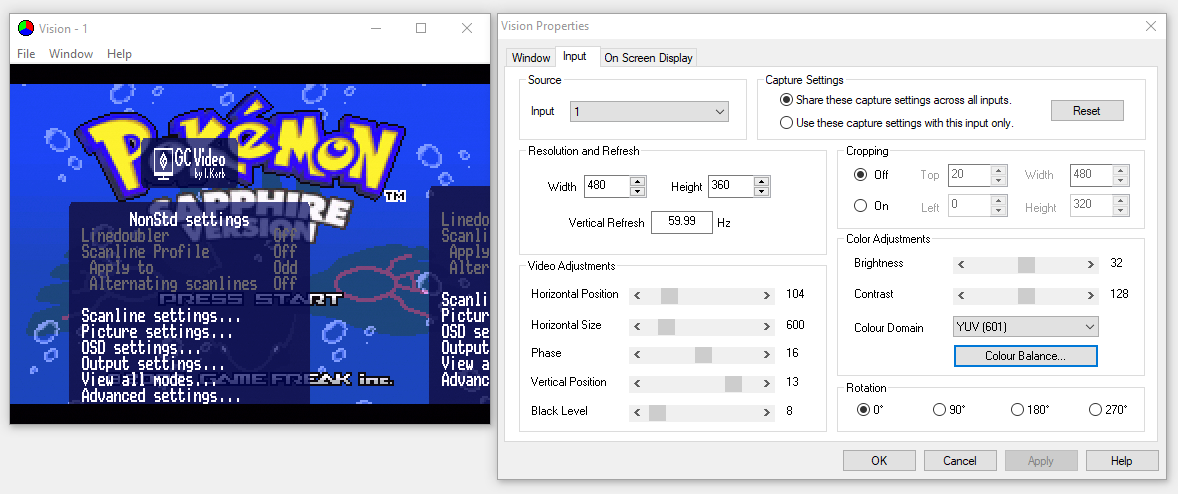

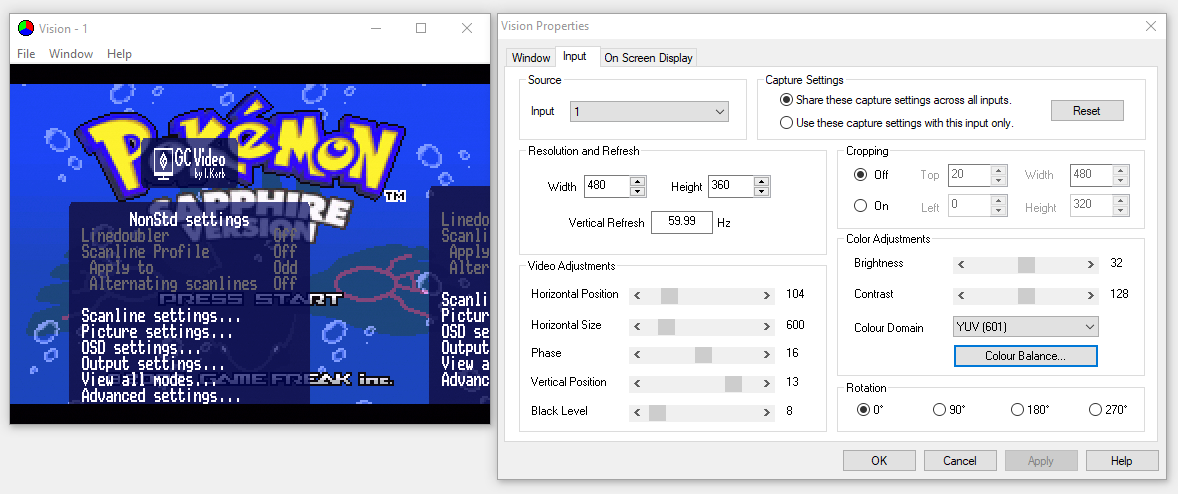

bobrocks95 wrote:
EDIT: I'm using GCVideo-DVI 3.0d into a Portta DAC for component output.

I've just bought this and it seems to be working fine directly to my Datapath VisionRGB card without any tweaking.

Looks like I have no choice but to buy an OSSC.

Re: OSSC (DIY video digitizer & scandoubler)

LDigital wrote:
I am trying to get my analogue Mister FPGA Snes Nes and GBA cores to display via my OSSC

I have no issues with sega, Neo geo or PCE cores but the nintendo ones will not display. It appears to sync as the light is green and stays so but the screen is blank. They act like my original snes and Nes did before I did he dejitter mod, but I am told that the dejitter function has been implemented into these cores so they should display. Has anyone managed this?

Did you try all vsync_adjust modes in the .ini?

NES and SNES work @60.10Hz. Some TVs (notably LG) can have a problem with that.

Edit: OK, sorry, I'm dumb. You're using analog out. Yeah, they're probably imitating the original console's output exactly.

-

bobrocks95

- Posts: 3624

- Joined: Mon Apr 30, 2012 2:27 am

- Location: Kentucky

Re: OSSC (DIY video digitizer & scandoubler)

Extrems wrote:
bobrocks95 wrote:
EDIT: I'm using GCVideo-DVI 3.0d into a Portta DAC for component output.

I've just bought this and it seem to be working fine directly to my Datapath VisionRGB card without any tweaking.

Looks like I have no choice but to buy an OSSC.

Let me at least remove my HDMI splitter from my chain, Extrems, and see if that does anything. Have you had other reports of sync issues with people using a DAC + OSSC?

The carby preset works fine but of course that's in a standard 240p window.

Re: OSSC (DIY video digitizer & scandoubler)

bobrocks95 wrote:
Have you had other reports of sync issues with people using a DAC + OSSC?

Yes, I've had someone else report the same symptoms.

Re: OSSC (DIY video digitizer & scandoubler)

I'm currently using an RGB cable directly plugged into the GC's analog AV port, and I also was never able to use the signal output by gbihf-ossc.dol+cli by feeding it into the OSSC: depending on OSSC settings, in some cases the OSSC does not manage to sync, and in all cases my monitors/HDMI capture cards show no image. If I feed it into a PVM 2053MD, the image looks like this; this is the title screen of Kururin Paradise, for reference. (edit, small addendum: the reason it looks like this is that it's a 15kHz monitor, so it doesn't tolerate signals having an HSync frequency significantly above ~15.7kHz. It'd need a multisync monitor for that, although there are reports that even those don't accept the signal.)

So I have just been using GBI-hf's 240p mode (implying Line1x) so far, which in my view still gives a very good image when line-multiplied by the OSSC (example, please watch in 720p60, note that the footage was re-encoded twice, once by me and once again by YouTube, so there are artifacts that are not part of the live HDMI signal), although the image does not fill the digital and analog screen areas to the extent achievable by differing GBI settings.

Last edited by 6t8k on Thu Jun 04, 2020 4:20 pm, edited 1 time in total.

-

bobrocks95

- Posts: 3624

- Joined: Mon Apr 30, 2012 2:27 am

- Location: Kentucky

Re: OSSC (DIY video digitizer & scandoubler)

Personally I haven't been able to get the OSSC to sync again, trying both hd60 and hdcustom refresh rates, so I'm unable to test if my splitter is causing issues or if my Datapath Vision likes what the OSSC is outputting.

Re: OSSC (DIY video digitizer & scandoubler)

Sorry if this has been asked before but I couldn't find anything

Is there a full enclosure available at all for the OSSC? Something that protects the PCB rather than leaving it exposed with the stock plastic? It's a little hard to transport in its stock form, I feel like I have to baby it and constantly make sure the PCB doesn't get dusty and stuff.

Unrelated but something else I'm struggling with is death grip on component cables, especially Wii ones.

How do you guys deal with this? I obviously don't want to ruin the RCA jacks on the OSSC. I thought about getting a component switcher, but the only consoles I have that connect that way are the PS2 and Wii, so it seems like a waste of space for just 2 inputs.

Maybe there are female to male RCA adapters out there? Basically to just make the jack less grippy.

Re: OSSC (DIY video digitizer & scandoubler)

I found an issue with 1080i where I need to reduce the horizontal sync width to 1.

I think something is funky with this converter.

-

bobrocks95

- Posts: 3624

- Joined: Mon Apr 30, 2012 2:27 am

- Location: Kentucky

Re: OSSC (DIY video digitizer & scandoubler)

Extrems wrote:
I found an issue with 1080i where I need to reduce the horizontal sync width to 1.

I think something is funky with this converter.

If you know if the other user who reported an issue was using the Portta as well, I'm willing to test any cheap DAC available on Amazon. I only got the Portta for S/PDIF support, but it's not worth the cabling problems that would cause so I'm just using the analog audio. I can also try any OSSC tweaks that might be helpful.

Re: OSSC (DIY video digitizer & scandoubler)

They're using the one recommended by Mike Chi and RetroRGB.

I can't see why either couldn't work with the OSSC as... it clearly works otherwise.

-

bobrocks95

- Posts: 3624

- Joined: Mon Apr 30, 2012 2:27 am

- Location: Kentucky

Re: OSSC (DIY video digitizer & scandoubler)

If it's helpful at all, I got the OSSC to sync again, and my Datapath Vision shows no signal in OBS.

Any input marqs?

Re: OSSC (DIY video digitizer & scandoubler)

bobrocks95 wrote:
If you know if the other user who reported an issue was using the Portta as well, I'm willing to test any cheap DAC available on Amazon. I only got the Portta for S/PDIF support, but it's not worth the cabling problems that would cause so I'm just using the analog audio. I can also try any OSSC tweaks that might be helpful.

Does this only occur with GCVideo+DAC configuration, not e.g. with official component cable? I can try gbihf-ossc.dol if it boots up to point where video mode is changed without GB Player hardware.

Re: OSSC (DIY video digitizer & scandoubler)

Both ends originally assumed the official component cable. GBIHF will exit without a Game Boy Player, so you'll need to apply those settings to the standard edition instead.

Re: OSSC (DIY video digitizer & scandoubler)

marqs: With just an RGB cable between the GC and the OSSC (listed as an option here), I'm getting similar (the same?) phenomena that bobrocks95 reported: depending on when I power cycle the OSSC / switch to RGBS input mode, it's also possible that the status either rapidly oscillates between ~371p, ~22.48kHz, ~60.59Hz and "NO SYNC", or between various mis-detected line-counts, as seen here. So I'm leaning towards the notion that anything in-between the GC and the OSSC is not the decisive factor.
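For anyone comparing the status readings posted in this thread, the three numbers are tied together by simple arithmetic: the vertical refresh rate is the horizontal (line) frequency divided by the total number of lines per frame. The Python sketch below assumes that the "p" figure on the OSSC display is the total line count, which is my understanding but is not stated in this thread; `refresh_hz` is a hypothetical helper name.

```python
def refresh_hz(line_rate_hz: float, total_lines: int) -> float:
    """Vertical refresh rate in Hz, given the line rate in Hz and total lines per frame."""
    if total_lines <= 0:
        raise ValueError("total_lines must be positive")
    return line_rate_hz / total_lines


if __name__ == "__main__":
    # Readings reported earlier in the thread (kHz values converted to Hz).
    readings = [
        (22_500, 375),  # reported as 375p 22.5kHz 60Hz
        (22_500, 374),  # reported as 374p 60.16Hz
        (22_480, 371),  # reported as ~371p, ~22.48kHz, ~60.59Hz
    ]
    for line_rate, lines in readings:
        print(f"{lines} lines at {line_rate / 1000:.2f} kHz -> {refresh_hz(line_rate, lines):.2f} Hz")
```

The reported figures are consistent with each other under this formula, which suggests the OSSC is seeing roughly the same line rate each time while the detected line count varies.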